GPT-5's Here: How Does API Pricing Compare With Yesterday's Stars?

Why GPT-5 Mini might be your new cost-effective automation workhorse

GPT-5's budget-friendly variants (GPT-5 mini and GPT-5 nano) are introduced at pricing that's only a modest overhead over GPT-4.1's. In fact, users may even see cost savings thanks to cheaper input token rates.

To the usual backdrop of frenzied anticipation (and speculation!), OpenAI finally released GPT-5 last week - alongside gpt-oss-120b (a 120B-parameter, mixture-of-experts, open-source model).

Many of my waking hours these days seem to go towards engaging ChatGPT in all sorts of fascinating conversations (yes, it's unashamedly my wife's and my go-to source of parenting advice!).

In the conversational realm, I'm moderately impressed by GPT-5, although it feels more like an incremental improvement, with better grounding and reasoning, than the game changer that some of the commentary in the lead-up to its release suggested.

What really excites me about AI, however, is not progressively better conversational interfaces, which will inevitably reach a kind of zenith.

It's tool use, MCP (the Model Context Protocol), automation, and the ability of AI to actually help us live better, more productive lives and get things done.

This is the frontier part of AI, where much is still buggy, UIs are gritty (where they exist at all), and things are still "getting figured out". But while tooling and standardized service access are evolving fast, the LLM remains the pivotal player in the orchestra - responsible now not just for inference, but also for using its newfound toolbox correctly.

For those deploying instructional workflows at scale, however, all of this eats up tokens, which eats up ... money. Fortunately, GPT-5's introductory pricing offers good value for money with its new budget-friendly variants: GPT-5 mini and GPT-5 nano.

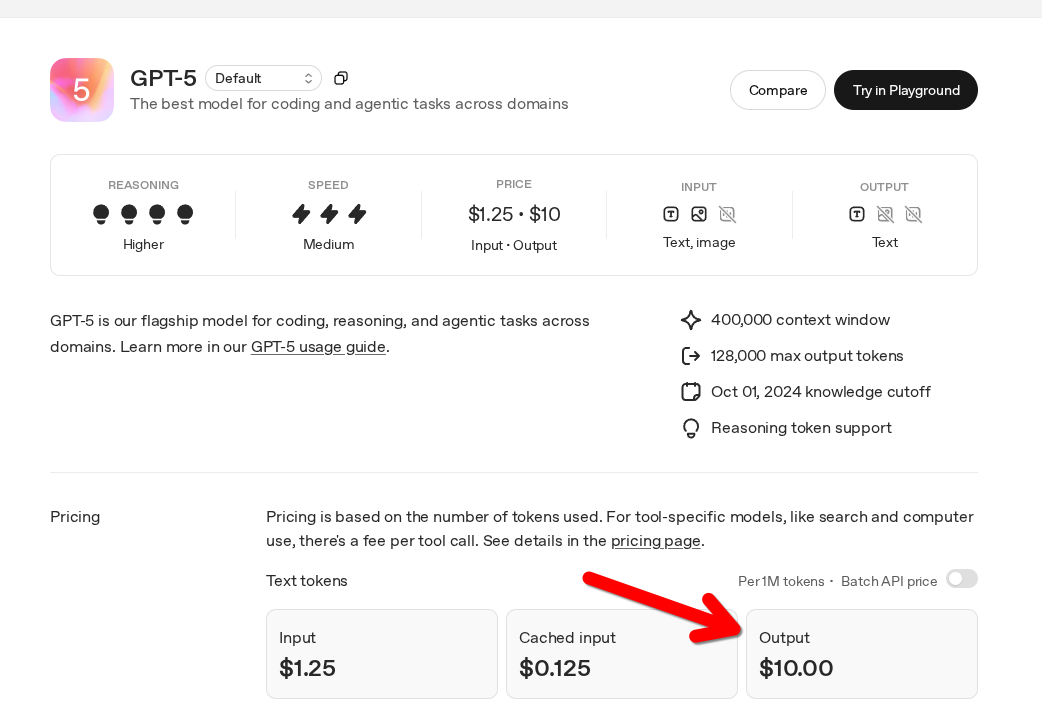

GPT-5 Main Model: $10/1M Output, $1.25/1M Input

GPT-5 is now OpenAI's everyday "flagship" state-of-the-art offering - with multimodal support, strong agentic and tooling capabilities, and decent reasoning.

It represents an adoption of the philosophy articulated by Sam Altman: the vast majority of use cases do not require PhD-level reasoning. For integrators and developers, however, GPT-5 remains predictably pricey. At $10 per 1M output tokens, it's easy to burn through tokens generating (literal) books with it.
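To make the output-heavy cost concrete, here's a minimal Python sketch of a per-request cost estimate at the GPT-5 list rates quoted above ($1.25 per 1M input tokens, $10 per 1M output tokens). The `Rates` class and `request_cost` helper are hypothetical illustrations, not part of any OpenAI SDK, and the rates should be checked against OpenAI's current pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Hypothetical helper: per-million-token prices in USD."""
    input_per_m: float
    output_per_m: float


# GPT-5 list rates as quoted in this article (verify before relying on them).
GPT5 = Rates(input_per_m=1.25, output_per_m=10.00)


def request_cost(rates: Rates, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request, ignoring prompt caching and any discounts."""
    return (input_tokens * rates.input_per_m + output_tokens * rates.output_per_m) / 1_000_000


# A long-form generation job: a modest prompt, a very long answer.
cost = request_cost(GPT5, input_tokens=8_000, output_tokens=200_000)
print(f"${cost:.2f}")  # $2.01
```

Output dominates: the 200,000-token answer accounts for $2.00 of that total, while the 8,000-token prompt adds just a cent.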

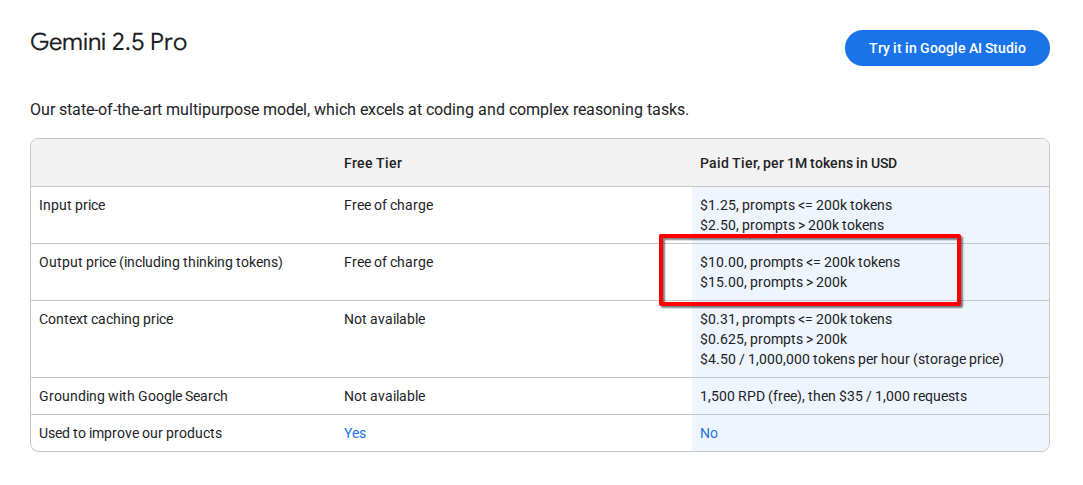

GPT-5's pricing also highlights an interesting dynamic in LLM pricing: while the cost of long-context prompting seems to be constantly eroding (a dynamic almost certainly driven in large part by the vast context demands of agentic IDEs), the cost of output remains fairly stubborn, at around $10 per 1M tokens for state-of-the-art models.

Whatever secret sauce is making context handling progressively cheaper (caching and, undoubtedly, lots of proprietary wizardry), inference continues to cost money that has to be passed on to consumers. The differential between the cost of inference (output) and input processing is remarkably pronounced: at GPT-5's list rates, an output token costs 8X as much as an uncached input token ($10 versus $1.25 per 1M tokens), and factoring prompt caching into the equation widens the gap much further.
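The differential falls straight out of the rate card. This sketch assumes a GPT-5 cached-input rate of $0.125 per 1M tokens (a 90% discount on the $1.25 uncached rate); that figure does not appear in this article, so treat it as an assumption and verify it before relying on it.

```python
# GPT-5 per-million-token rates in USD.
OUTPUT = 10.00          # from the rate card above
INPUT_UNCACHED = 1.25   # from the rate card above
INPUT_CACHED = 0.125    # assumption: cached-input rate, verify against current pricing


def output_to_input_ratio(output_rate: float, input_rate: float) -> float:
    """How many times more one output token costs than one input token."""
    return output_rate / input_rate


print(output_to_input_ratio(OUTPUT, INPUT_UNCACHED))  # 8.0
print(output_to_input_ratio(OUTPUT, INPUT_CACHED))    # 80.0
```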

This underscores an interesting facet of using LLMs via API: for anyone determined to use the API simply as a cheaper route to the models than the consumer products, it's almost always a losing proposition. For workflow execution, however, the models have huge independent value.

Conclusions for agent-builders and automators?

The cost differential between state-of-the-art and legacy models is eroding on input but not yet on output. The higher-end models remain useful for top-level generation, but caution is still needed to prevent spiraling API bills.

Which makes the mini models the best choice for value for money.

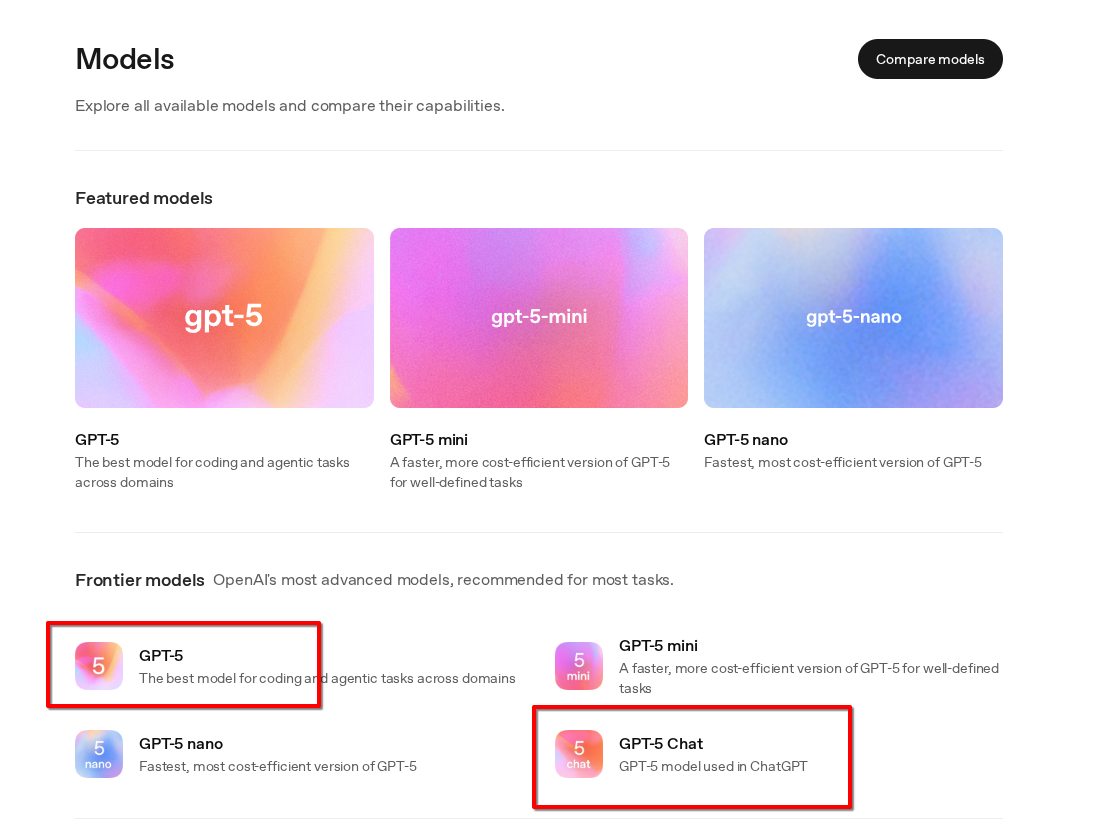

GPT-5-Chat Is ChatGPT Via API (Kind Of...)

Before we get to the actual pricing of those mini models, one quick observation, in passing, on how OpenAI's API lineup is evolving.

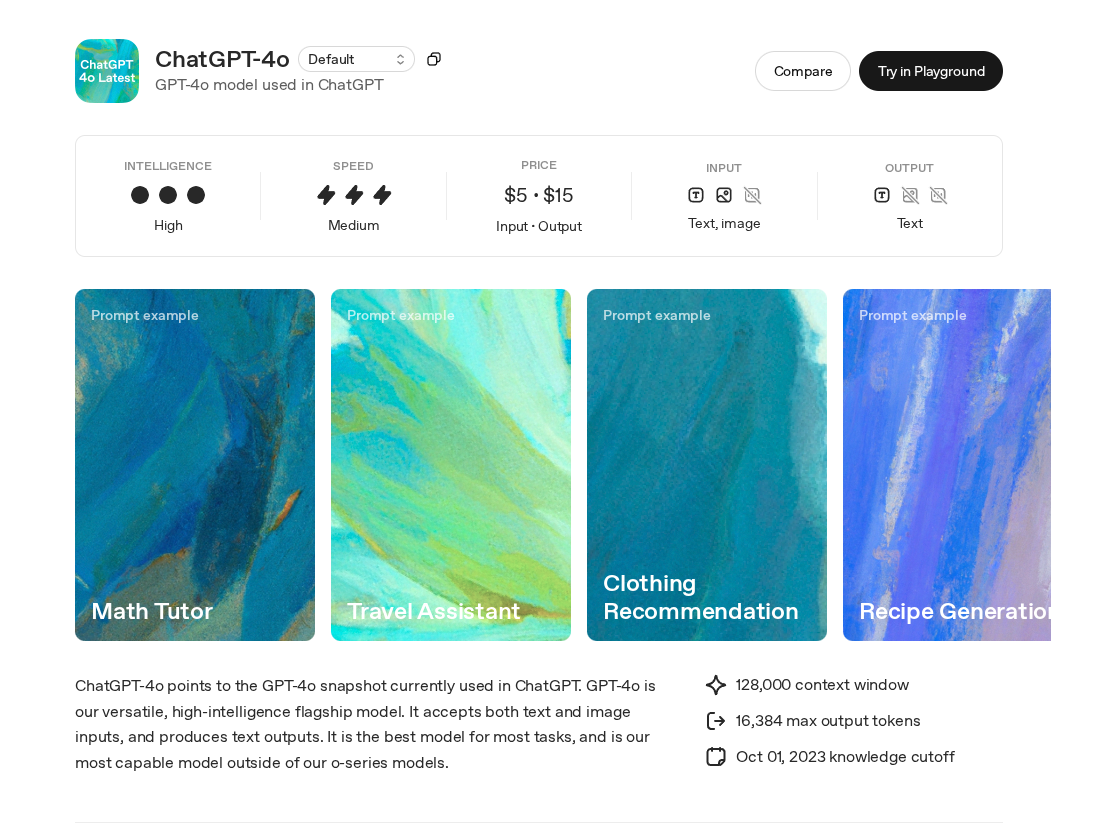

Confusingly, OpenAI have long maintained a model called ChatGPT in their API lineup. This practice seems to have been quietly retired. Up to now, however, it's been accurate to say that ChatGPT is both (technically speaking) the ubiquitous consumer product and a model available for API use.

This is a logical move that aligns with how competitors like Mistral have named their models. The old name did capture the fact that the model is a variant of GPT fine-tuned specifically for conversational interactions rather than instructional workflows. But it was always slightly misleading!

ChatGPT (the product) is a composite of many different elements, of which the model backing it is just one. Others include the system prompts and configurations hidden from users, as well as the tooling integrated into the platform.

So while it is possible to get a head start on building a great conversational interface backed by OpenAI by using this endpoint, it would be a mistake to think that doing so automatically lets you build your own custom version of ChatGPT. The updated naming, however, brings welcome clarity.
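To illustrate the point: calling the chat model through the API gives you the model, but the system prompt and any tools are yours to supply. The sketch below only builds a Chat Completions request body. The model ID `gpt-5-chat-latest` is my assumption for GPT-5-Chat's API name (check OpenAI's model list), and `build_chat_request` and the system prompt are hypothetical placeholders, not anything ChatGPT actually uses.

```python
import json


def build_chat_request(user_message: str, system_prompt: str,
                       model: str = "gpt-5-chat-latest") -> dict:
    """Build a Chat Completions request body (hypothetical helper).

    Nothing ChatGPT-specific comes for free: the system prompt is whatever
    you write, and no tools are attached unless you add them yourself.
    """
    return {
        "model": model,  # assumed model ID; verify against OpenAI's model list
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


# Hypothetical system prompt standing in for ChatGPT's hidden configuration.
payload = build_chat_request(
    "Summarise this thread in three bullet points.",
    system_prompt="You are a concise, friendly assistant.",
)
print(json.dumps(payload, indent=2))
```

With the official `openai` Python SDK, this body could be sent as `client.chat.completions.create(**payload)`; everything beyond the model that makes ChatGPT feel like ChatGPT would still have to be built around it.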

GPT-5 Mini And Nano: The Budget Workhorses

In addition to rolling out GPT-5 in ChatGPT (the platform) and making GPT-5 and GPT-5-Chat accessible via the API, OpenAI has released two budget variants of GPT-5 intended for cost-efficient workflows.

For anyone integrating GPT models into the things the API makes possible (a huge list, including agents, assistants and workflows), this is the rate card to pay attention to.

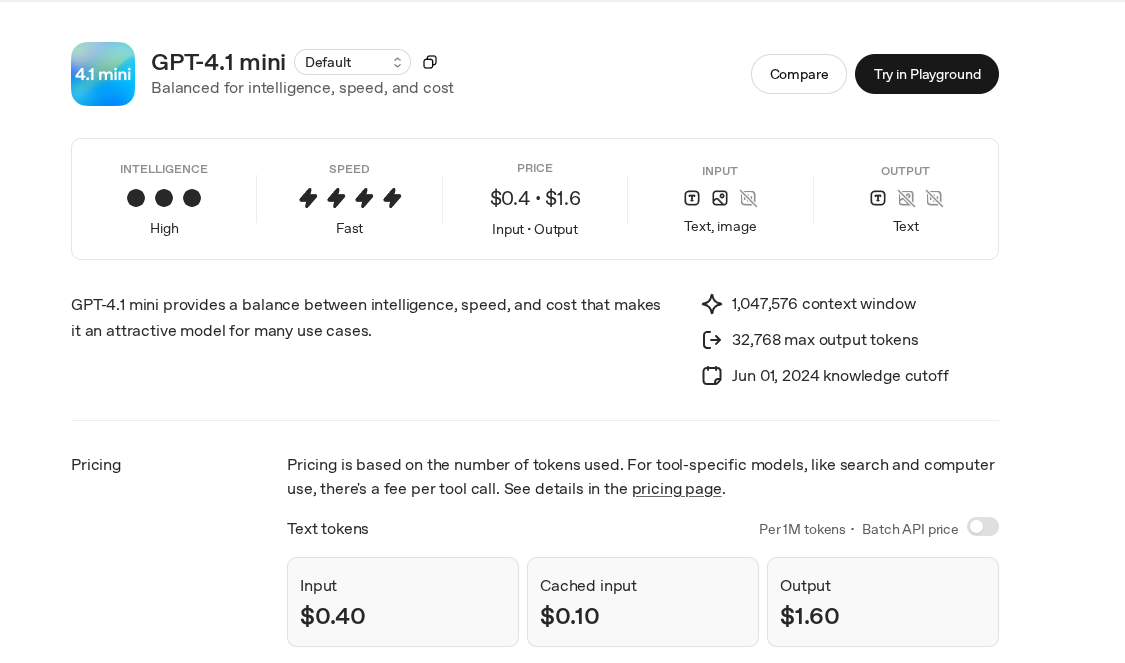

First, here's the rate card for GPT-4.1 mini:

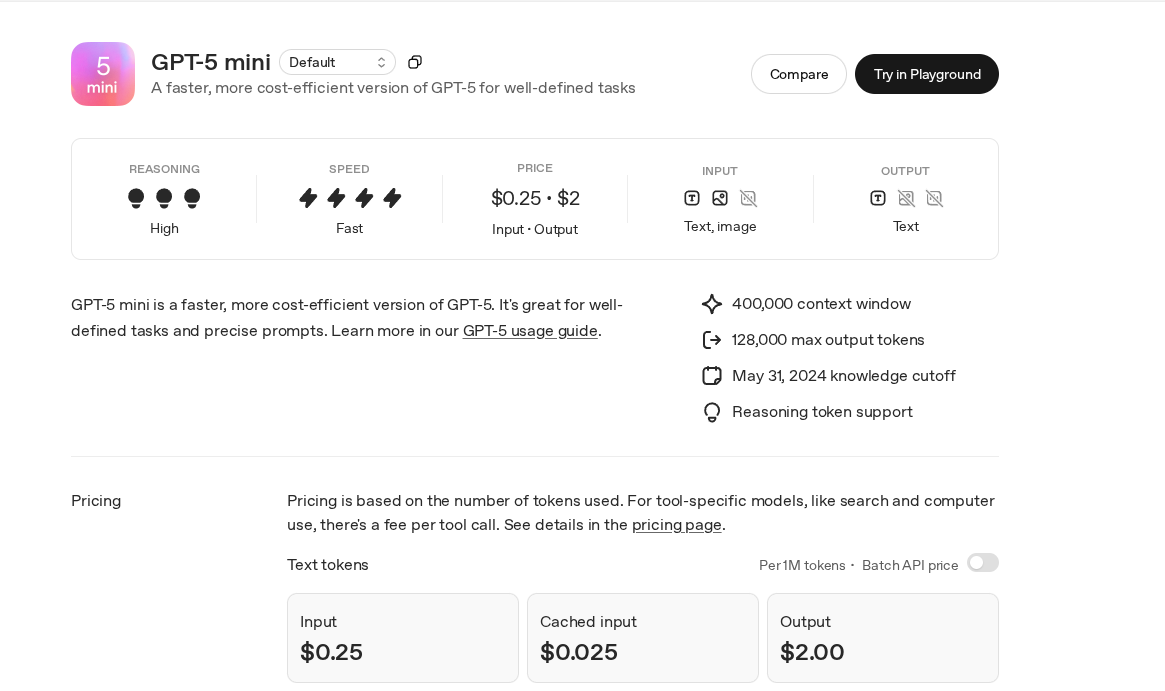

And here is the rate card for the newly released mini version of GPT-5:

Notice what's interesting?

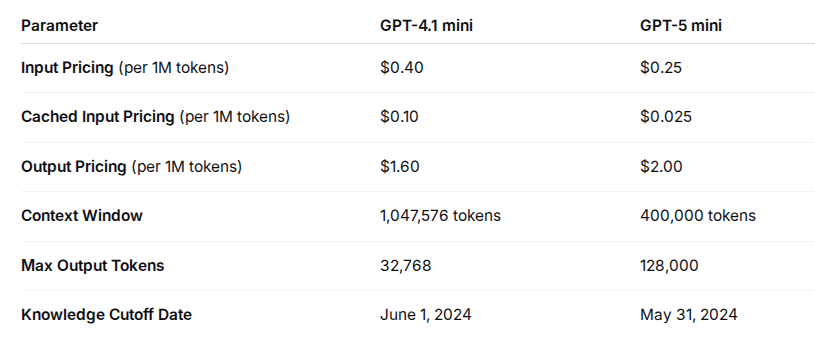

GPT-5 mini's introductory pricing actually gives it lower input pricing than GPT-4.1 mini: its cached input rate is just $0.025 per 1M tokens (2.5 cents), compared with $0.10 (ten cents) for GPT-4.1 mini.

The context window is smaller, however. GPT-5 mini has a maximum window of 400k tokens, versus just over one million on GPT-4.1 mini. If you're using these models in automations and workflows, this context window shouldn't present much of a challenge. For certain use cases like industry and code generation, however, even one million tokens can be dismally hard to work around (hey, code has a lot of words!).
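For workflows that stuff large documents or codebases into the prompt, a quick pre-flight check can decide which model a job can go to. This minimal sketch assumes the common rough heuristic of about four characters per token for English text (use a real tokenizer such as `tiktoken` for accurate counts) and treats the window as shared between the prompt and the reserved output; OpenAI may also cap input and output separately. GPT-4.1 mini's "just over one million" is rounded down to 1,000,000 here.

```python
# Context-window sizes in tokens. GPT-5 mini's 400k is from the rate card above;
# GPT-4.1 mini's "just over one million" is conservatively rounded down.
CONTEXT_WINDOWS = {
    "gpt-5-mini": 400_000,
    "gpt-4.1-mini": 1_000_000,
}

CHARS_PER_TOKEN = 4  # rough heuristic for English text, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate: ceil(len(text) / CHARS_PER_TOKEN)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits(model: str, prompt: str, max_output_tokens: int) -> bool:
    """True if the estimated prompt plus reserved output fits the model's window."""
    return estimate_tokens(prompt) + max_output_tokens <= CONTEXT_WINDOWS[model]


# A simulated ~2.4 MB dump of source files: roughly 600k tokens by this heuristic.
codebase = "x" * 2_400_000
print(fits("gpt-5-mini", codebase, max_output_tokens=16_000))    # False
print(fits("gpt-4.1-mini", codebase, max_output_tokens=16_000))  # True
```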

The difference in output pricing is relatively modest: at $2 per 1M tokens versus $1.60 for GPT-4.1 mini, GPT-5 mini is 25% more expensive. However, unlike GPT-4.1 mini, GPT-5 mini supports reasoning tokens.
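The percentages are easy to check from the two rate cards, and it's worth folding reasoning into the comparison: OpenAI bills reasoning tokens as output tokens, so a reasoning-heavy GPT-5 mini call can generate more billable output than the visible answer suggests. The helper names and the reasoning-token count below are hypothetical illustrations, not measurements.

```python
# Per-million-token rates in USD, as quoted in this article.
GPT5_MINI = {"cached_input": 0.025, "output": 2.00}
GPT41_MINI = {"cached_input": 0.10, "output": 1.60}


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new (negative means cheaper)."""
    return (new - old) / old * 100


print(round(pct_change(GPT5_MINI["cached_input"], GPT41_MINI["cached_input"])))  # -75
print(round(pct_change(GPT5_MINI["output"], GPT41_MINI["output"])))              # 25


def output_cost(visible_tokens: int, reasoning_tokens: int, output_rate: float) -> float:
    """USD cost of a response; reasoning tokens are billed at the output rate."""
    return (visible_tokens + reasoning_tokens) * output_rate / 1_000_000


# Hypothetical call: a 500-token answer preceded by 1,500 hidden reasoning tokens.
print(output_cost(500, 1_500, GPT5_MINI["output"]))  # 0.004 (vs 0.001 for the answer alone)
```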

The knowledge cut-off dates are basically identical (May 31, 2024), which is approximately 14 months in the past at the time of writing. Realistically, both models are going to require a RAG pipeline (or external data supplied by MCP tools) to deliver up-to-date information, whether that's needed for conversational or instructional workflows.

Key Takeaways

So is it worth upgrading workflows, agents, assistants and automations to OpenAI's newest release if they're currently running on GPT-4.1 or GPT-4.5?

My answer is a resounding: "yes."

Both are relatively economical to run at scale, and 25% is not a huge overhead for upgrading to GPT-5. The added cost of output is also offset, at least in part, by the cheaper input rate. Depending on the balance of input and output tokens in a workload, users may notice no cost difference, or even a modest saving, by switching it over to GPT-5.
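Whether the cheaper input actually offsets the pricier output depends on a workload's mix of input and output tokens. This sketch finds the input:output ratio above which GPT-5 mini works out cheaper than GPT-4.1 mini. It uses the cached-input and output rates quoted above, plus uncached input rates of $0.25 (GPT-5 mini) and $0.40 (GPT-4.1 mini) per 1M tokens, which are my assumption from OpenAI's published pricing at the time of writing and are not shown in this article. Reasoning tokens, billed as output, would push the break-even point higher.

```python
def break_even_ratio(new_in: float, new_out: float, old_in: float, old_out: float) -> float:
    """Input:output token ratio above which the new model is cheaper per request.

    Solves new_in*I + new_out*O = old_in*I + old_out*O for I/O. Assumes the new
    model has cheaper input and pricier output; otherwise there is no crossover.
    """
    if not (new_in < old_in and new_out > old_out):
        raise ValueError("expected cheaper input and pricier output for the new model")
    return (new_out - old_out) / (old_in - new_in)


# GPT-5 mini (new) vs GPT-4.1 mini (old), USD per 1M tokens.
uncached = break_even_ratio(0.25, 2.00, 0.40, 1.60)  # assumed uncached input rates
cached = break_even_ratio(0.025, 2.00, 0.10, 1.60)   # cached input rates from this article
print(f"uncached: {uncached:.2f}:1, cached: {cached:.2f}:1")  # uncached: 2.67:1, cached: 5.33:1
```

In other words, an automation that reads more than about 2.7 uncached input tokens (or about 5.3 cached ones) for every token it writes would come out cheaper on GPT-5 mini under these assumptions; output-heavy jobs would cost more.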

Relative to GPT-4.1, GPT-5 shows superior tool use (as validated on benchmarks), as well as better guardrails against hallucination and a stronger ability to mitigate emerging vulnerabilities.

Given that you're getting access to a more performant model, however, this is actually a net benefit!


Automation specialist and technical communications professional bridging AI systems, workflow orchestration, and strategic communications for enhanced business performance.

Learn more about Daniel